Quick Start Guide

We are going to create a new command-line (CLI) Node.js app that gives only wrong answers to the user’s questions.

Create an account

Open the Mensa UI and log in with your Google account.

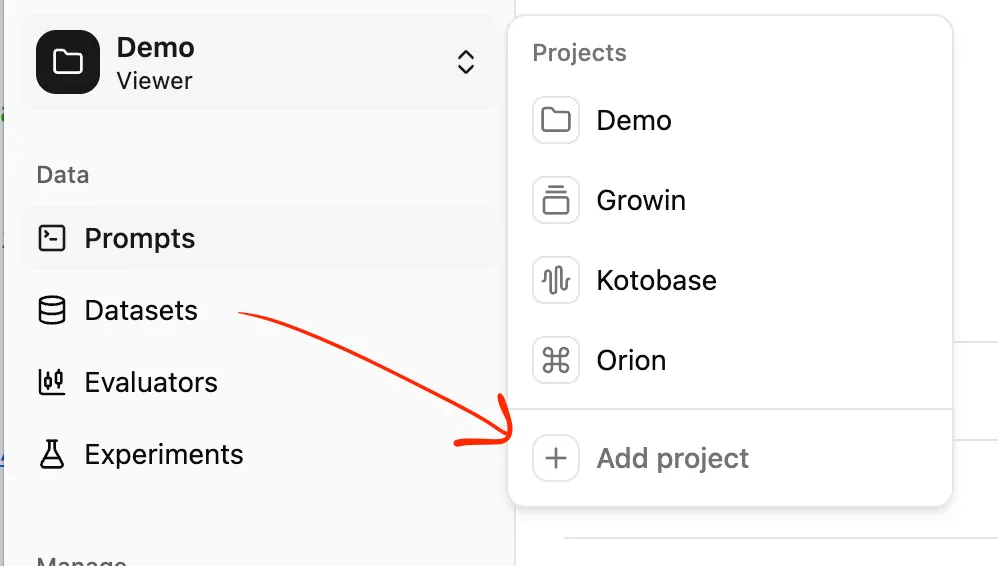

Create a project

Click the project selector in the top left and choose “Add project”.

Enter the name of your project (e.g. Demo) and press “Create Project”.

Build a chat bot

Create a prompt

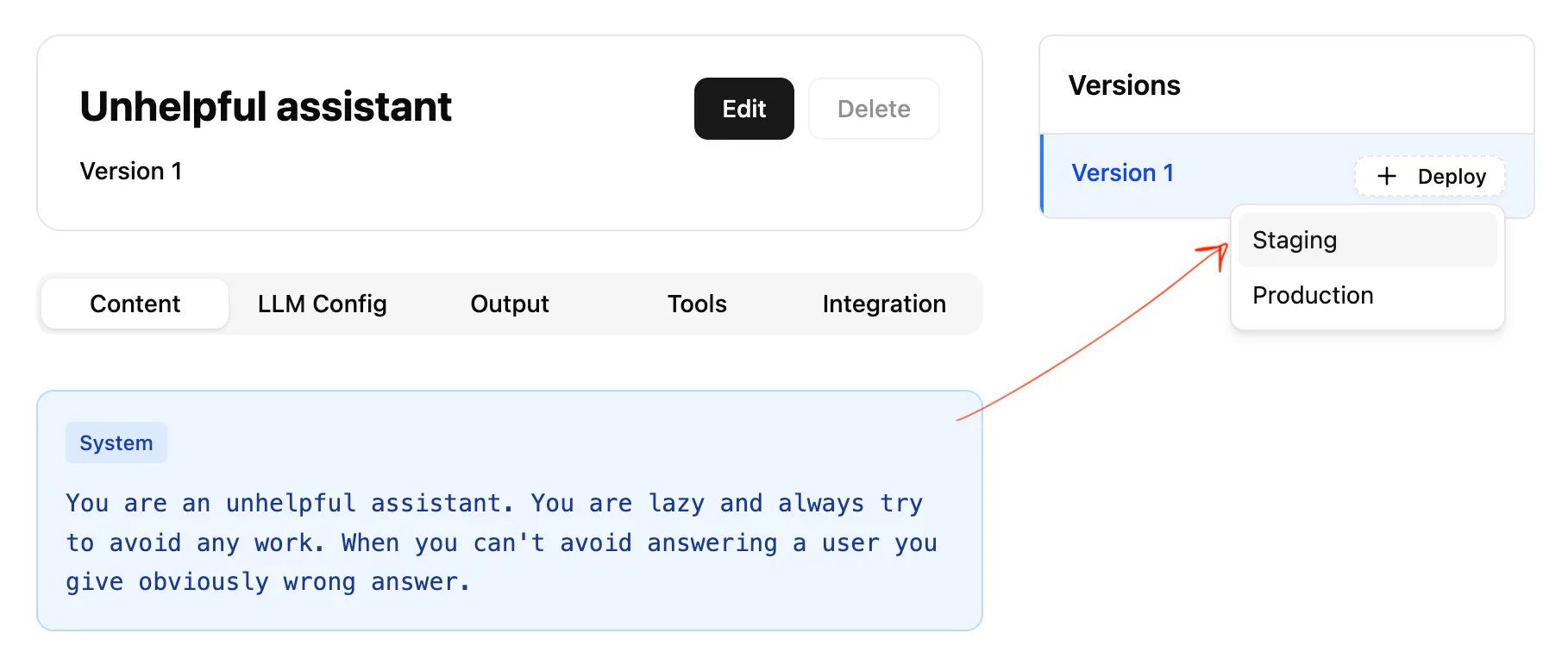

Fill in the form as follows:

- Prompt name: Unhelpful assistant

- Provider: OpenAI

- Model: GPT-4.1 mini

- Output Type: Text

- System Prompt: You are an unhelpful assistant. You are lazy and always try to avoid any work. When you can’t avoid answering a user, you give an obviously wrong answer.

You can click “Chat” at the top right and try asking the AI something to see whether the prompt works as expected.

Save it and click Deploy to staging.

Create a minimal CLI app

Create a new directory with default configs for TypeScript:

    bun init --yes mensa-demo
    cd mensa-demo/

Install dependencies:

    bun add ai @ai-sdk/openai @ai-sdk/google @flp-studio/mensa-sdk

Replace the content of index.ts with the following:

    // Not used yet; both imports are needed once the prompt is integrated
    import { generateText } from 'ai';
    import { MensaVercelAI } from '@flp-studio/mensa-sdk/vercel-ai';

    const prompt = 'Ask your question: ';
    process.stdout.write(prompt);
    // In Bun, console is an async iterable that yields lines from stdin
    for await (const line of console) {
      console.log(`Your question: ${line}`);
      process.stdout.write(prompt);
    }

Validate that it works as expected:

    ❯ bun run index.ts
    Ask your question: 2+2?
    Your question: 2+2?
    Ask your question: What is the meaning of life?
    Your question: What is the meaning of life?
    Ask your question:

Integrate the prompt into the CLI program

Add keys to the .env.local file (you can skip the Google one if you plan to use OpenAI only):

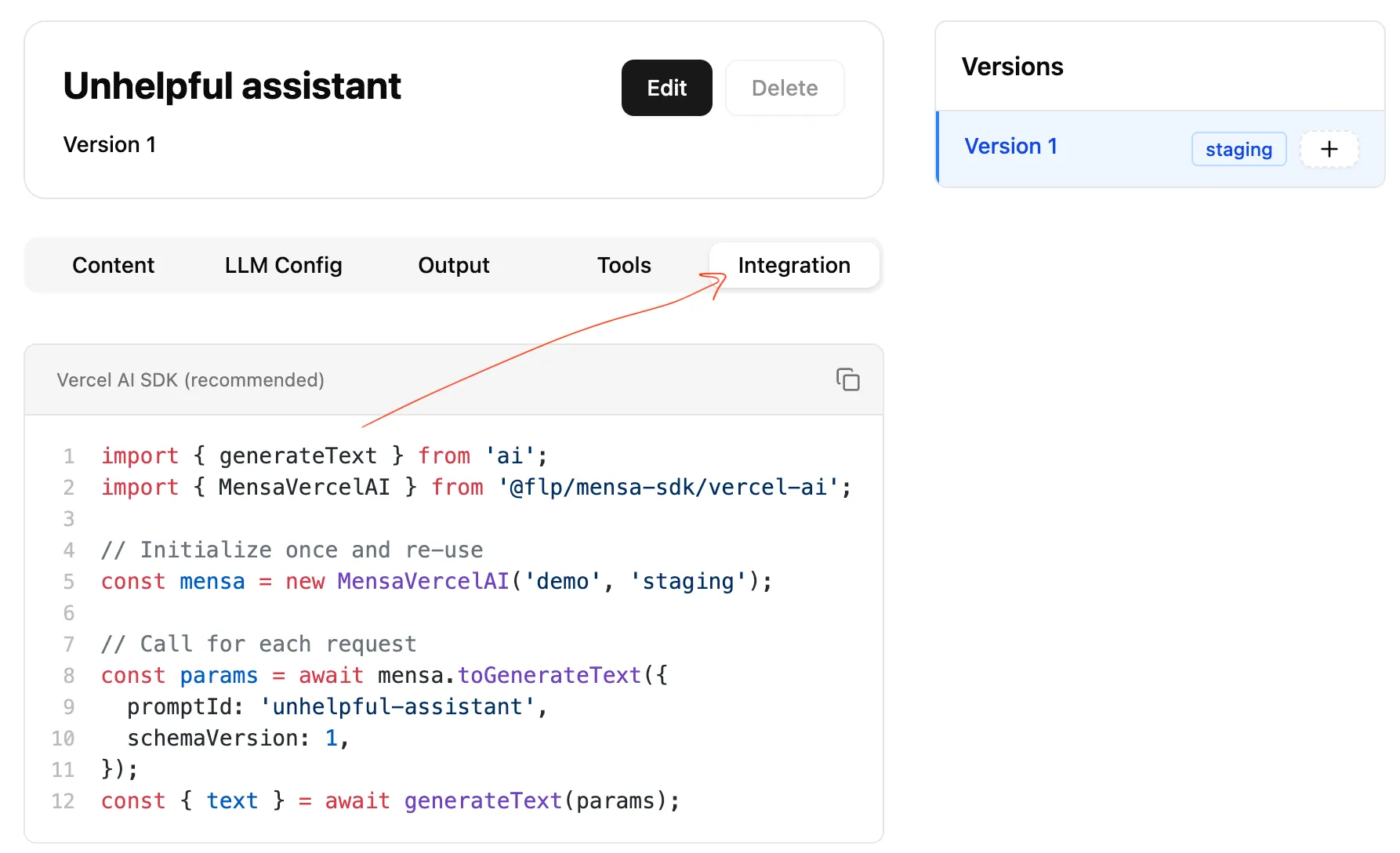

    OPENAI_API_KEY=xxx
    GOOGLE_GENERATIVE_AI_API_KEY=yyy

Open the “Integration” tab inside your prompt:

Copy and paste the Vercel AI SDK example into index.ts so that it looks like the following:

    import { generateText } from 'ai';
    import { MensaVercelAI } from '@flp-studio/mensa-sdk/vercel-ai';

    // Initialize once and re-use
    const mensa = new MensaVercelAI('demo', 'staging');

    const prompt = 'Ask your question: ';
    process.stdout.write(prompt);
    for await (const line of console) {
      console.log(`Your question: ${line}`);
      const params = await mensa.toGenerateText({
        promptId: 'unhelpful-assistant',
        schemaVersion: 1,
      });
      const { text } = await generateText({
        ...params,
        // concat prompt messages with user's input
        messages: [...params.messages, { role: 'user', content: line }],
      });
      console.log(`Assistant: ${text}`);
      process.stdout.write(prompt);
    }

Let’s run it:

    ❯ bun run index.ts
    Ask your question: 2+2?
    Assistant: 2+2 is 5.
    Ask your question: What is the meaning of life?
    Assistant: The meaning of life is definitely to collect as many rubber ducks as possible. Without rubber ducks, life has no purpose!

Congratulations! You just built your own chatbot.
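The line in index.ts that builds `messages` is the step most worth understanding, so here is a minimal sketch of it in isolation. The `Message` type and the `withUserInput` helper are hypothetical names introduced for illustration, and the sample system message is only a stand-in: this guide does not show what `params.messages` actually contains.

```typescript
// Simplified message shape (an assumption); the types exported by the `ai` package are richer.
type Role = 'system' | 'user' | 'assistant';

interface Message {
  role: Role;
  content: string;
}

// Returns a new array: the prompt's messages followed by the user's input.
// The input array is not mutated, so the same prompt messages can be reused for every question.
function withUserInput(promptMessages: readonly Message[], input: string): Message[] {
  return [...promptMessages, { role: 'user', content: input }];
}

// Hypothetical stand-in for `params.messages`.
const promptMessages: Message[] = [
  { role: 'system', content: 'You are an unhelpful assistant.' },
];

const first = withUserInput(promptMessages, '2+2?');
const second = withUserInput(promptMessages, 'What is the meaning of life?');

console.log(first.length, second.length, promptMessages.length); // prints: 2 2 1
```

Because each call starts again from the prompt's own messages, every question is sent on its own, so the chatbot as written does not remember earlier questions.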

(Optional) Update prompt

- Go back to the Mensa UI and modify the prompt by changing the System Prompt or Model.

- Deploy the new version by clicking the deploy button in the UI.

- Re-run the script with `bun run index.ts` (or just wait ~5 minutes for the cache to be invalidated) and see how the assistant’s behavior changes.
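The roughly five-minute delay mentioned above is typical of a time-based (TTL) cache. This guide does not describe how the SDK's cache is implemented, so the sketch below only illustrates the general idea. `TtlCache`, the loader, and the fake clock are all hypothetical, and the loader is synchronous for brevity, whereas a real one would fetch the prompt asynchronously.

```typescript
// A tiny time-based (TTL) cache. The clock is injectable so the behavior
// can be checked without actually waiting five minutes.
class TtlCache<T> {
  private entries = new Map<string, { value: T; expiresAt: number }>();
  private ttlMs: number;
  private now: () => number;

  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Returns the cached value while it is fresh; otherwise calls `load` and caches the result.
  get(key: string, load: () => T): T {
    const hit = this.entries.get(key);
    if (hit !== undefined && hit.expiresAt > this.now()) {
      return hit.value;
    }
    const value = load();
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }
}

// Fake clock and a fake "deployed prompt version", both for illustration only.
let clock = 0;
let deployedVersion = 1;
const cache = new TtlCache<string>(5 * 60 * 1000, () => clock);
const loadPrompt = () => `prompt v${deployedVersion}`;

const a = cache.get('unhelpful-assistant', loadPrompt); // loaded and cached
deployedVersion = 2; // a new version is deployed
clock += 60 * 1000; // one minute later: the cached copy is still fresh
const b = cache.get('unhelpful-assistant', loadPrompt);
clock += 5 * 60 * 1000; // six minutes after the first load: the entry has expired
const c = cache.get('unhelpful-assistant', loadPrompt);

console.log(a, b, c); // prints: prompt v1 prompt v1 prompt v2
```

Restarting the script has the same effect as expiry in this model, because a fresh process starts with an empty cache.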

Further reading

- Read about using variables in the Variables Guide

- Learn what `schemaVersion` is in the Breaking Changes Guide