Variables Guide

More often than not, static prompts are not enough. Instead, the backend needs to modify prompts on the fly with data from a database, the user's request, or environment variables.

To solve this, the Mensa platform lets you define variables inside your prompts.

For example, suppose we are building a life-coaching service, and during onboarding the user provides personal information such as their name, age, and profession.

We can use this information inside the system prompt like this:

You are a professional life coach. You need to help the user achieve their personal and professional goals by identifying their strengths, conquering obstacles, and creating action plans for success.

Greet the user by name: {userName}

User’s age: {userAge}

User’s profession: {userProfession}

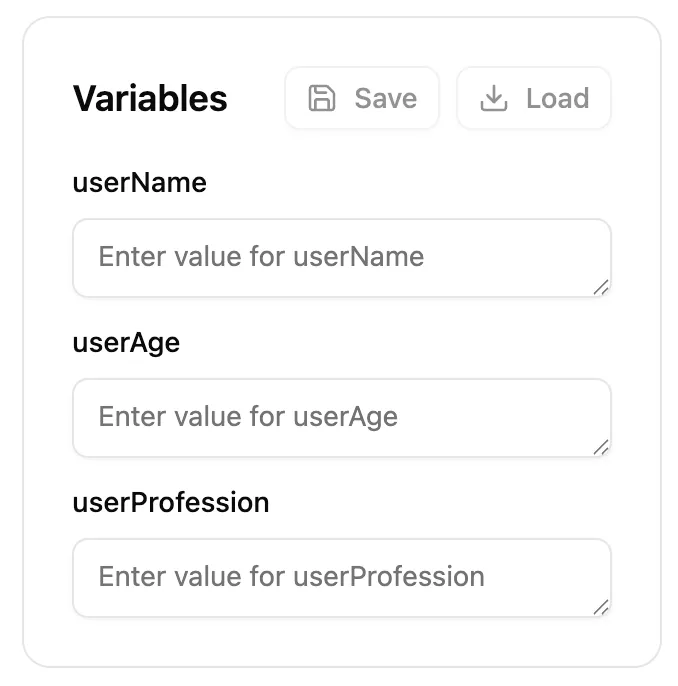

As soon as you type a word enclosed in curly braces it becomes a variable (or a placeholder) and appears in the right sidebar:

Fill in the values, and each variable name will be replaced with its value when the request is executed against the LLM.
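One way to picture this substitution is a simple template fill. The TypeScript sketch below is not Mensa's implementation; it assumes a variable name consists of letters, digits, or underscores, and that a placeholder with no value is left unchanged. `extractVariables` and `fillVariables` are hypothetical helpers.

```typescript
// Hypothetical helpers: a sketch of {placeholder} substitution, not Mensa's actual implementation.

// Assumption: a variable name is a run of letters, digits, or underscores inside curly braces.
const VARIABLE_SOURCE = "\\{(\\w+)\\}";

// Returns each variable name once, in order of first appearance.
function extractVariables(template: string): string[] {
  const pattern = new RegExp(VARIABLE_SOURCE, "g");
  const names = new Set<string>();
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(template)) !== null) {
    names.add(match[1]);
  }
  return Array.from(names);
}

// Replaces every {name} with its value; placeholders without a value are left untouched (assumption).
function fillVariables(template: string, values: Record<string, string>): string {
  const pattern = new RegExp(VARIABLE_SOURCE, "g");
  return template.replace(pattern, (placeholder: string, name: string) =>
    Object.prototype.hasOwnProperty.call(values, name) ? values[name] : placeholder,
  );
}

const systemPrompt = "Greet the user by name: {userName}\nUser's age: {userAge}";
console.log(extractVariables(systemPrompt));
console.log(fillVariables(systemPrompt, { userName: "Alice", userAge: "34" }));
```

Leaving unknown placeholders in place makes a missing value visible in the rendered prompt instead of silently producing an empty string; Mensa's own handling of missing values may differ.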

You can save the values to Datasets to auto-fill them later or to quickly switch between several different sets of values.

When integrating the prompt, you need to set those values in the SDK call:

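```typescript
// Assumes `mensa` is an initialized Mensa SDK client and this code runs inside an async context.
const params = await mensa.toGenerateText({
  promptId: 'prompt-with-variables',
  schemaVersion: 1,
  // Keys must match the variable names used in the prompt.
  variablesMap: {
    userName: 'your-value',
    userAge: 'your-value',
    userProfession: 'your-value',
  },
});
```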
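In a real backend the values usually come from stored data rather than literals. The sketch below assumes a hypothetical `UserProfile` record saved during onboarding, and that `variablesMap` takes string values, as the example above suggests. `buildVariablesMap` and `assertAllVariablesSet` are illustrative names, not part of the Mensa SDK.

```typescript
// Hypothetical shape of the onboarding data your backend stores (not a Mensa type).
interface UserProfile {
  name: string;
  age: number;
  profession: string;
}

// Maps a stored profile onto the variable names used in the prompt.
// Assumption: variablesMap values are strings, as in the SDK example above.
function buildVariablesMap(profile: UserProfile): Record<string, string> {
  return {
    userName: profile.name,
    userAge: String(profile.age),
    userProfession: profile.profession,
  };
}

// Hypothetical safeguard: throws if any expected variable is absent or empty before the request is sent.
function assertAllVariablesSet(required: readonly string[], variablesMap: Record<string, string>): void {
  const missing = required.filter(
    (key) => !Object.prototype.hasOwnProperty.call(variablesMap, key) || variablesMap[key] === "",
  );
  if (missing.length > 0) {
    throw new Error(`Missing prompt variables: ${missing.join(", ")}`);
  }
}

// This literal stands in for a record loaded from a database.
const storedProfile: UserProfile = { name: "Alice", age: 34, profession: "Designer" };
const variablesMap = buildVariablesMap(storedProfile);
assertAllVariablesSet(["userName", "userAge", "userProfession"], variablesMap);
// variablesMap would then be passed as the `variablesMap` option of mensa.toGenerateText(...).
```

Checking for missing values on the backend surfaces a forgotten variable as an explicit error before any LLM request is made.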

Further reading

- Learn more about datasets in the Datasets Guide

- Understand what is considered a Breaking Change and how to update your integration